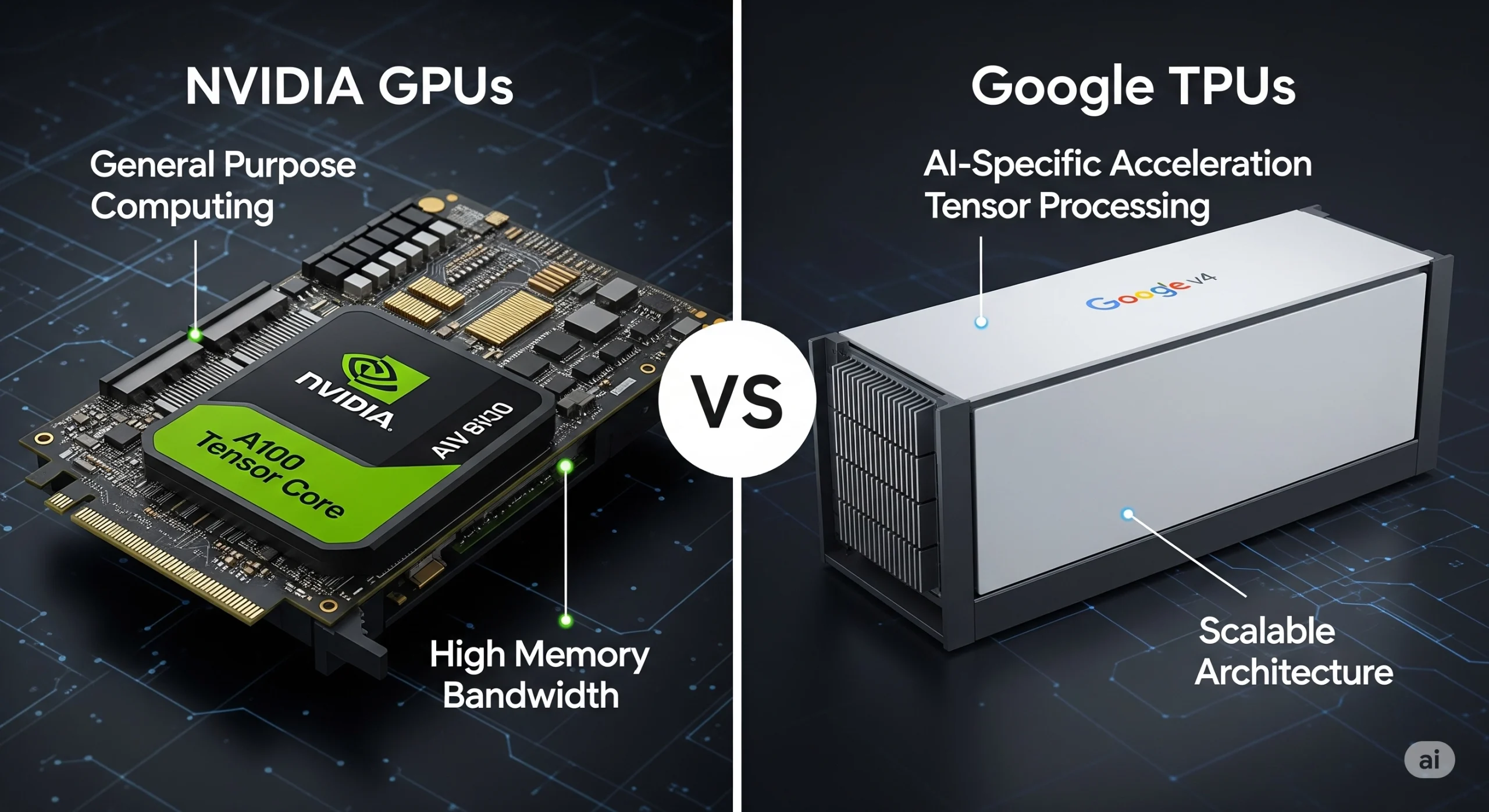

NVIDIA GPU vs. Google TPU

Two giants are driving the AI revolution: NVIDIA’s general-purpose GPU and Google’s specialized TPU. This site explores their differences in design philosophy, architecture, performance, and business strategy to decipher the future of AI computing.

Design Philosophy: Generalist vs. Specialist

The fundamental difference between GPUs and TPUs lies in their origins. GPUs were “adapted” from graphics processing to general-purpose computing, while TPUs were “designed” to solve Google’s specific problems. This distinction defines their character.

NVIDIA GPU: King of Versatility

Like a “Swiss Army knife,” the GPU’s strength is the flexibility to handle almost any parallel computing task. Its capabilities, honed in the world of graphics, were opened up to scientific computing and AI by CUDA.

1999: GeForce 256

Marketed as the “world’s first GPU,” with hardware transform and lighting (T&L).

2001: GeForce 3

Introduced programmable shaders, opening the door to general-purpose computing.

2007: CUDA

Made GPGPU accessible to everyone, laying the foundation for the AI era.

Google TPU: Seeker of Specialization

Like a “surgeon’s scalpel,” the TPU specializes in a single purpose: neural network computation. It was developed from scratch to meet the explosive computational demands of Google’s data centers.

2015: TPUv1

Specialized in inference, with performance per watt that Google reported as far higher than contemporary CPUs and GPUs.

2017: TPUv2

Added training capabilities, enabling massive scaling with “TPU Pods.”

2018-Present: TPUv3+

Pursued performance and scalability, making giant models possible.

Architecture: Deconstructing the Silicon

Let’s look inside the computing engines. GPUs take an “extension” approach, adding specialized cores to a collection of general-purpose cores. TPUs take a “specialization” approach, featuring a massive computation unit optimized for matrix operations.

NVIDIA: CUDA Cores & Tensor Cores

A hybrid configuration of numerous general-purpose “CUDA Cores” and specialized “Tensor Cores” that accelerate AI calculations.

Flexible CUDA Cores and powerful Tensor Cores coexist.
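
To make the Tensor Core idea concrete, here is a minimal sketch of the kind of operation such a unit accelerates: a fused multiply-accumulate on a small matrix tile, D = A × B + C, with low-precision inputs and a higher-precision accumulator. This is plain Python, not NVIDIA’s API. The names `to_fp16` and `tile_mma` are hypothetical, and Python floats stand in for the FP32 accumulator.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE half-precision (FP16) value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def tile_mma(a, b, c):
    """Compute D = A @ B + C on one small tile.

    A and B are rounded to FP16 before multiplying. Products are summed
    in a wider accumulator (a Python float here, standing in for FP32).
    """
    n, k, m = len(a), len(b), len(b[0])
    d = [[c[i][j] for j in range(m)] for i in range(n)]
    for i in range(n):
        for j in range(m):
            acc = d[i][j]
            for p in range(k):
                acc += to_fp16(a[i][p]) * to_fp16(b[p][j])
            d[i][j] = acc
    return d


# Usage: small values like these are exactly representable in FP16.
a = [[1.0, 2.0], [3.0, 4.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
bias = [[0.5, 0.5], [0.5, 0.5]]
print(tile_mma(a, identity, bias))  # [[1.5, 2.5], [3.5, 4.5]]
```

On real hardware the whole tile is processed as a single instruction rather than a triple loop. The sketch only shows the arithmetic contract: narrow inputs, wide accumulation.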

Google: Systolic Array (MXU)

A “Systolic Array” specialized for matrix operations. Data flows like a wave, enabling ultra-efficient computation.

Data flows between units, minimizing memory access.
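
The wave-like data flow can be sketched with a small cycle-by-cycle simulation. The example below models a simplified “output-stationary” systolic array in plain Python: values of A move left to right, values of B move top to bottom, and each processing element (PE) accumulates one output. It is an illustration under those assumptions, not a model of the actual MXU, whose dataflow details differ (for example, which operand stays resident in the array). The function name `systolic_matmul` is hypothetical.

```python
def systolic_matmul(a, b):
    """Multiply a (n x k) by b (k x m) on a simulated n x m systolic array.

    Returns the product and the number of operand reads at the array
    edges, i.e. reads from memory outside the array.
    """
    n, k, m = len(a), len(a[0]), len(b[0])
    acc = [[0] * m for _ in range(n)]        # one accumulator per PE
    a_reg = [[None] * m for _ in range(n)]   # A value held by each PE
    b_reg = [[None] * m for _ in range(n)]   # B value held by each PE
    edge_reads = 0

    for t in range(n + m + k - 2):           # cycles until the wave drains
        # Shift A one PE to the right and B one PE down.
        for i in range(n):
            for j in range(m - 1, 0, -1):
                a_reg[i][j] = a_reg[i][j - 1]
        for j in range(m):
            for i in range(n - 1, 0, -1):
                b_reg[i][j] = b_reg[i - 1][j]

        # Feed the edges. Row i and column j are delayed by i and j
        # cycles so that matching operands meet in the right PE.
        for i in range(n):
            p = t - i
            a_reg[i][0] = a[i][p] if 0 <= p < k else None
            edge_reads += 0 <= p < k
        for j in range(m):
            p = t - j
            b_reg[0][j] = b[p][j] if 0 <= p < k else None
            edge_reads += 0 <= p < k

        # Every PE multiplies the pair it currently holds.
        for i in range(n):
            for j in range(m):
                if a_reg[i][j] is not None and b_reg[i][j] is not None:
                    acc[i][j] += a_reg[i][j] * b_reg[i][j]

    return acc, edge_reads


product, reads = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(product)  # [[19, 22], [43, 50]]
print(reads)    # 8
```

Each operand enters the array once and is then passed from neighbor to neighbor, so the edge reads total n·k + k·m. A naive loop that fetches both operands for every multiply would perform 2·n·k·m reads (16 in this 2 × 2 example), and the gap widens as the matrices grow.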

The Performance Gauntlet

We compare their capabilities using industry-standard “MLPerf” benchmarks and efficiency metrics. Is it NVIDIA for absolute performance, or Google for cost efficiency?
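
As a rough sketch of how such efficiency metrics are derived, the snippet below computes performance per watt and throughput per dollar from raw throughput. All figures are made-up placeholders for two hypothetical chips, not benchmark results for any NVIDIA or Google product. The `Accelerator` class and both helper functions are likewise illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str
    throughput: float     # samples per second on some benchmark (placeholder)
    power_watts: float    # average board power (placeholder)
    cost_per_hour: float  # rental price in dollars (placeholder)


def perf_per_watt(acc: Accelerator) -> float:
    """Samples per second delivered for each watt consumed."""
    return acc.throughput / acc.power_watts


def samples_per_dollar(acc: Accelerator) -> float:
    """Samples processed for each dollar of rental cost."""
    return acc.throughput * 3600 / acc.cost_per_hour


# Hypothetical numbers, chosen only to show that the rankings can differ.
chip_a = Accelerator("chip_a", throughput=1000.0, power_watts=500.0, cost_per_hour=4.0)
chip_b = Accelerator("chip_b", throughput=800.0, power_watts=250.0, cost_per_hour=2.0)

for chip in (chip_a, chip_b):
    print(chip.name, perf_per_watt(chip), samples_per_dollar(chip))
```

With these placeholder values, `chip_a` wins on absolute throughput while `chip_b` wins on both efficiency metrics, which is why the choice of metric matters when reading benchmark comparisons.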

Ecosystem: The Battle for Developers’ Hearts

The true value of hardware is unlocked by software. NVIDIA has built a broad “CUDA empire” that is available wherever its GPUs run, attracting developers across the industry. Meanwhile, Google offers peak efficiency in its “vertical kingdom,” optimized for its own cloud.

NVIDIA: Horizontal Empire & “CUDA Moat”

For anyone, anywhere. A vast library and a huge community form a powerful barrier to entry (a “moat”) that competitors cannot easily overcome.

Google: Vertical Kingdom & Optimized Stack

Exclusive to Google Cloud. Hardware, software, and cloud are tightly integrated to deliver the best performance and total cost of ownership (TCO).

Conclusion: Two Paths to Different Peaks

The AI hardware battle is not a winner-take-all game. NVIDIA will continue to dominate the broader market with its flexibility and ecosystem, while Google carves out its own niche with a powerful TCO argument for specific, large-scale workloads.

Future Outlook

The battlefield is shifting from model “training” to “inference,” which accounts for the majority of operational costs. This favors the power-efficient TPU, but NVIDIA is also enhancing its inference performance and countering with its vast CUDA ecosystem. The final choice will be a strategic one, balancing absolute performance, cost, and ease of development.
